Zenseact celebrates four accepted research papers at prestigious AI conference

Zenseact, a fast-growing AI company developing car safety software, is thrilled to announce the acceptance of four pioneering research papers to the highly esteemed IEEE/CVF Computer Vision and Pattern Recognition Conference (CVPR), set to take place in Seattle this June.


Gothenburg, 23 April. Zenseact, a fast-growing AI company developing car safety software, is thrilled to announce the acceptance of four pioneering research papers to the highly esteemed IEEE/CVF Computer Vision and Pattern Recognition Conference (CVPR), set to take place in Seattle this June.

Three of the papers were accepted to the main CVPR conference and one to the esteemed CVPR Workshop on Autonomous Driving. One of the main-conference papers was also selected as a “highlight” for its exceptional innovation, placing it among the top 3% of the 11,532 paper submissions. The acceptance of these papers underscores Zenseact’s important contributions to the fields of computer vision and deep learning and reflects the company’s long-term efforts to develop world-leading car safety software.

Computer vision is a field that aims to enable computers to process and interpret visual data, such as images and videos, in a manner similar to human vision. Deep learning, the branch of machine learning that underpins modern computer vision, uses artificial neural networks that learn from large datasets, substantially improving accuracy on tasks such as visual recognition. Integrating computer vision and deep learning is therefore essential for developing automotive software, enabling cars to navigate intricate traffic situations by precisely recognizing and understanding their environment.

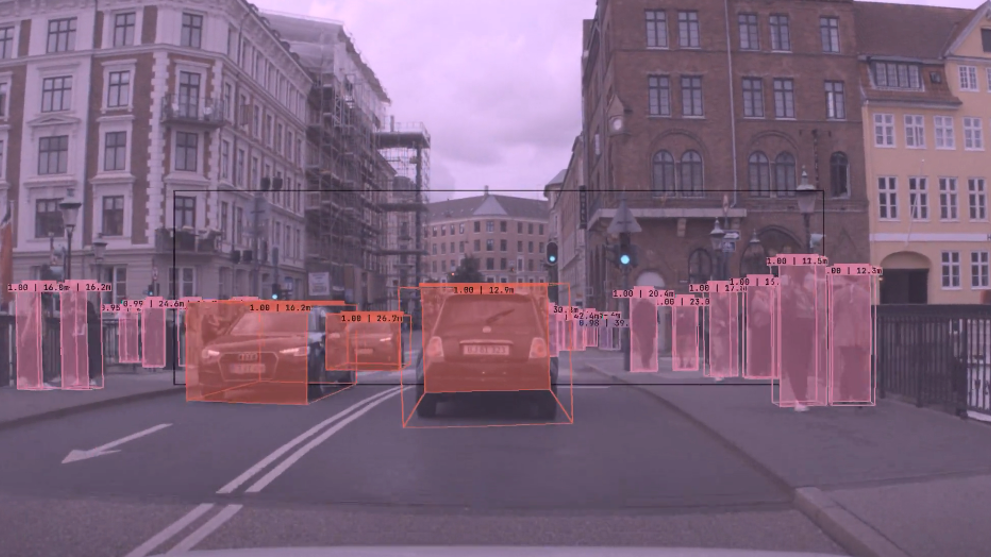

Zenseact’s contributions at CVPR address critical challenges in computer vision and autonomous driving. The first paper (1, below) introduces a state-of-the-art method for creating photorealistic simulations of traffic scenarios. This method could make the testing and training of autonomous driving software significantly more cost-effective, comprehensive, and safe.

The second paper (2) addresses the problem of data leakage in online mapping. Here, data leakage means that a system has, in effect, already seen the areas it will be tested on during training, which can make it seem more capable than it actually is. It is like taking your driving test on a route you have already practiced: you may pass the test but still be unprepared for driving on other roads. Zenseact’s paper suggests that using separate geographic areas for training and testing gives a better measure of how well a mapping system performs in places it has not seen before.
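To make the idea of a geographically separated evaluation concrete, here is a minimal Python sketch, assuming each data sample is tagged with a region label such as a city name or map tile. The function name `geographic_split` and the data format are hypothetical illustrations, not the method from Zenseact’s paper: whole regions, rather than individual samples, are assigned to either training or testing, so no test location is ever seen during training.

```python
import random


def geographic_split(samples, test_fraction=0.2, seed=0):
    """Split samples so that no region appears in both train and test.

    samples: list of (sample_id, region) pairs, where region is a
    hypothetical label such as a city name or map tile ID.
    test_fraction: fraction of *regions* (not samples) held out for testing.
    """
    # Collect the distinct regions and shuffle them reproducibly.
    regions = sorted({region for _, region in samples})
    rng = random.Random(seed)
    rng.shuffle(regions)

    # Hold out whole regions for testing (at least one).
    n_test = max(1, round(len(regions) * test_fraction))
    test_regions = set(regions[:n_test])

    # Every sample goes to exactly one side, determined only by its region.
    train = [s for s in samples if s[1] not in test_regions]
    test = [s for s in samples if s[1] in test_regions]
    return train, test


if __name__ == "__main__":
    cities = ["gothenburg", "gothenburg", "stockholm", "malmo", "malmo",
              "oslo", "oslo", "oslo", "copenhagen", "helsinki"]
    data = list(enumerate(cities))
    train, test = geographic_split(data, test_fraction=0.4, seed=1)
    print("train regions:", sorted({r for _, r in train}))
    print("test regions:", sorted({r for _, r in test}))
```

By contrast, a random split over individual samples would likely place recordings from the same streets in both sets, which is exactly the kind of leakage described above.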

The third paper (3) presents a new tool (a “vision transformer”) that can efficiently handle high-resolution, wide-angle fisheye images. These images are essential for various robotics applications, including autonomous driving. The new tool helps these systems better understand and react to their surroundings.

Lastly, the fourth paper (4) introduces approaches to ensure that AI models, trained to understand traffic environments, perceive real-world and simulated data in the same way. These approaches not only enhance the reliability of simulations but also boost the overall safety and robustness of autonomous systems in real-world scenarios, all while reducing costs.

Together, the four papers advance the safety, reliability, and scalability of autonomous driving technologies.

Behind Zenseact’s research success lies a steadfast dedication to improving traffic safety by developing next-generation car automation. Supported by a culture that recognizes the value of research in a field evolving at breakneck speed, the company reaffirms its position as a significant contributor to the global automotive tech scene, demonstrating both quality and a competitive spirit.

“This is a great achievement that shows our research and innovation capabilities in computer vision and deep learning are of global caliber,” says Christoffer Petersson, Technical Expert in Deep Learning at Zenseact. “Our commitment to making cars safer by pushing forward with AI and computer vision technology is paying off.”

Located in the Lindholmen area of Gothenburg – a nexus of automotive innovation – Zenseact benefits from its proximity to car manufacturers, tech companies, and other artificial intelligence enterprises. This strategic position fuels the company’s research and solidifies its role as a significant player in driving the development of cutting-edge safety technology for cars.

“We’re perfectly positioned to push the boundaries of what’s possible,” Petersson continues. “Computer vision has long been foundational for automotive software, but the integration of deep learning has catalyzed unprecedented progress. At Zenseact, deep learning is not just another tool; it’s a core technology playing an increasingly central role in our research and product development. Coupled with our innovative researchers and engineers, this positions us ideally to achieve our mission of eliminating traffic accidents.”

This recognition at CVPR 2024 highlights Zenseact’s research excellence and dedication to contributing meaningful advancements to traffic safety. Zenseact’s overarching purpose is to save lives in traffic, which requires the company to be at the forefront of innovation.